Qiqi GAO

高奇琦

About the author

Qiqi Gao serves as the Director of the Artificial Intelligence and Big Data Index Research Institute and the Institute of Political Science at East China University of Political Science and Law. He holds the position of Professor and acts as a Doctoral Supervisor. He is also a distinguished scholar who has been selected as a Young Scholar under the National Talent Program and is the Chief Expert of a Major Project of the National Social Science Fund. Additionally, he is a member of the National Committee for Governance of the New Generation of Artificial Intelligence.

He is also the founder of three major index projects including the Global Governance Index (SPIGG), the National Governance Index (NGI), and the China Corporate Social Responsibility Index (CICSR).

关于作者

高奇琦任华东政法大学人工智能与大数据指数研究院院长、政治学研究院院长,教授,博士生导师。国家级人才计划青年学者入选者。国家社科基金重大专项首席专家。国家新一代人工智能治理专委会委员。主要社会职务:中国通信学会网络空间安全战略与法律委员会副主任委员、上海国家新一代人工智能创新发展试验区专家咨询委员会委员、上海市大数据社会应用研究会副会长、上海市法学会人工智能法治研究会副会长、上海市美国学会副会长、上海市自然辩证法学会副会长。

他创立和主持具有国内外声誉的三大指数项目:全球治理指数(SPIGG)、国家治理指数(NGI)、中国企业社会责任指数(CICSR)。

The following excerpt is a translation of Gao Qiqi’s article “Artificial Intelligence, the Fourth Industrial Revolution, and the International Political Economy Landscape” (高奇琦:人工智能、四次工业革命与国际政治经济格局).

▶ Cite Our Translation

Concordia AI. “Qiqi Gao — Chinese Perspectives on AI Safety.” Chineseperspectives.ai, 29 Mar. 2024, chineseperspectives.ai/Qiqi-Gao.

▶ Cite This Work

高奇琦(2019). 人工智能、四次工业革命与国际政治经济格局. 当代世界与社会主义(06),12-19.

Translation

In the era of intelligence, it is necessary to form a holistic consideration of artificial intelligence’s (AI) future direction from a global perspective. The reasons include the following four points.

原文

在智能时代,需要在全球层面形成对人工智能未来发展方向的整体思考。原因包括如下三点。1

First, AI could accelerate the advent of a risk-prone society. For instance, AI might be used in various illicit industries. In a sense, the speed at which AI is applied in illicit industries could surpass the speed at which it is applied in legitimate ones. Tempted by significant economic benefits, AI could be used more recklessly in non-compliant areas, posing severe risks.

第一,人工智能可能会加快风险社会的来临。如人工智能可能被用于一些黑色产业。从某种意义上说,人工智能用于黑色产业的速度要比白色产业更快。在巨大经济利益的诱惑下,可以更加肆无忌惮地将人工智能用在一些不合规的领域,产生严重的风险。

Second, military applications of AI might escalate military competition among countries. AI development in the United States was initially driven by the military. The US military hopes to utilize AI on the battlefield, and there are already such deployments, such as extensive use of drones. However, this not only increases the gap between the US military and the militaries of other countries, but also poses significant ethical concerns. Decision-making by machines in military operations could become a convenient scapegoat for the US military to sidestep accountability. For example, in the event of a military drone attacking civilians, the US military might shift the blame onto the machine to avoid responsibility.

第二,人工智能在军事中的应用可能会加剧各国的军事竞争。美国在开发人工智能时,最初就是由军方推动的。美国军方希望将人工智能用于战场,并且目前已经有相关的部署,例如大量的无人机被应用。但是这一方面增加了美军与其他国家军事力量之间的差距,另一方面还会产生巨大的伦理问题。机器在军事行动中的决策,可能成为美军推卸责任的借口,例如在出现军事无人机攻击平民事件之后,美国军方将决策的责任归为机器,从而逃避责任。

Third, as a disruptive technology, the impact of AI on human society will spill over to other countries. For example, the unemployment risks triggered by AI development are likely to have a global impact. If the risk of widespread unemployment spreads across the world, it will lead to serious societal problems. At the same time, the unemployment risks will also exacerbate the wave of anti-immigration sentiment, a trend that has already manifested in Europe and America. Therefore, it's critical for sovereign nations to collaborate in thinking about these challenges, creating a cohesive plan for AI development.

第三,作为颠覆性的技术,人工智能对人类社会产生的影响会向其他国家外溢。如由于人工智能的发展引发的失业风险很可能会产生全球性的影响。如果大面积的失业风险在全世界蔓延,会引发严重的社会问题。同时,失业风险还会加剧反移民的浪潮,在欧美已经出现了这样的趋势。因此,主权国家需要联合起来思考这些问题,共同对人工智能的发展进行整体性的规划。

Fourth, countries should reach a consensus regarding the question of artificial general intelligence (AGI) development. At present, most Western countries encourage further development of AGI, as the Asilomar Principles are not opposed to AGI development. However, the ultimate development of AGI poses significant challenges to the meaning of human existence. If the development of AGI were to cause humanity to lose its meaning, such an outcome would be difficult for humanity to accept. Therefore, there is a pressing need for all countries to come together to reach a basic consensus on the direction of AGI development.

第四,在通用人工智能的研发问题上,各国应该达成共识。目前,西方国家大多鼓励进一步发展通用人工智能,如阿西洛马原则也并不反对通用人工智能的发展。但是,通用人工智能的最终发展很可能会对人类存在的意义产生巨大的挑战。如果通用人工智能的发展最终导致人类失去存在的意义,那么这将是人类所难以接受的。这需要各国联合起来,达成对通用人工智能发展方向的基本共识。

The following is from a speech Professor Gao gave in English at the International AI Cooperation and Governance Forum 2023. We have edited the speech for clarity, but the content remains the same. We have additionally translated this speech into Chinese.

▶ Cite Our Translation

Concordia AI. “Qiqi Gao — Chinese Perspectives on AI Safety.” Chineseperspectives.ai, 29 Mar. 2024, chineseperspectives.ai/Qiqi-Gao.

▶ Cite This Work

Qiqi Gao(2023-12-9). “Consensus Governance of LLMs from the perspective of Intersubjectivity”. Speech at the International AI Cooperation and Governance Forum 2023. https://aisafetychina.substack.com/p/concordia-ai-at-the-international

Original speech

Thank you. I'm Gao Qiqi, and I'm from East China University of Political Science and Law. I'm trying to use the concept of consensus governance of big models.

Chinese Translation

谢谢,我是高奇琦,来自华东政法大学,今天我试图探讨大型模型共识治理的概念。

Part 0 Introduction

We need large language model governance, right? How to govern LLMs. I think there are a lot of technical discussions here. I think the academic community focuses more on technical issues. There are a lot of discussions about topics like specification gaming and reward hacking. I think this is the most important topic.

Part.0 引言

显然,我们需要对大语言模型进行治理。那么我们该如何治理大模型呢?我认为这里有很多技术治理讨论,学术界更关注这些技术问题,例如有很多关于规范博弈(specification gaming)、奖励破解(reward hacking)或其他方面的讨论。我认为这是一个非常重要的话题。

For example, there is a lot of discussion about mechanistic interpretability. There's a lot of discussion about advancements here. You can see this in interviews and literature about linear classifiers abroad.

例如,关于机制可解释性(mechanistic interpretability),肯定有很多讨论,这是一些这个主题上的文献。

I don’t have enough time to go into all the details, but you can see all these advancements. So it's partly about global interpretability or local interpretability.

没有足够的时间去详细展开介绍,你可以看到可解释性相关的这些进展。

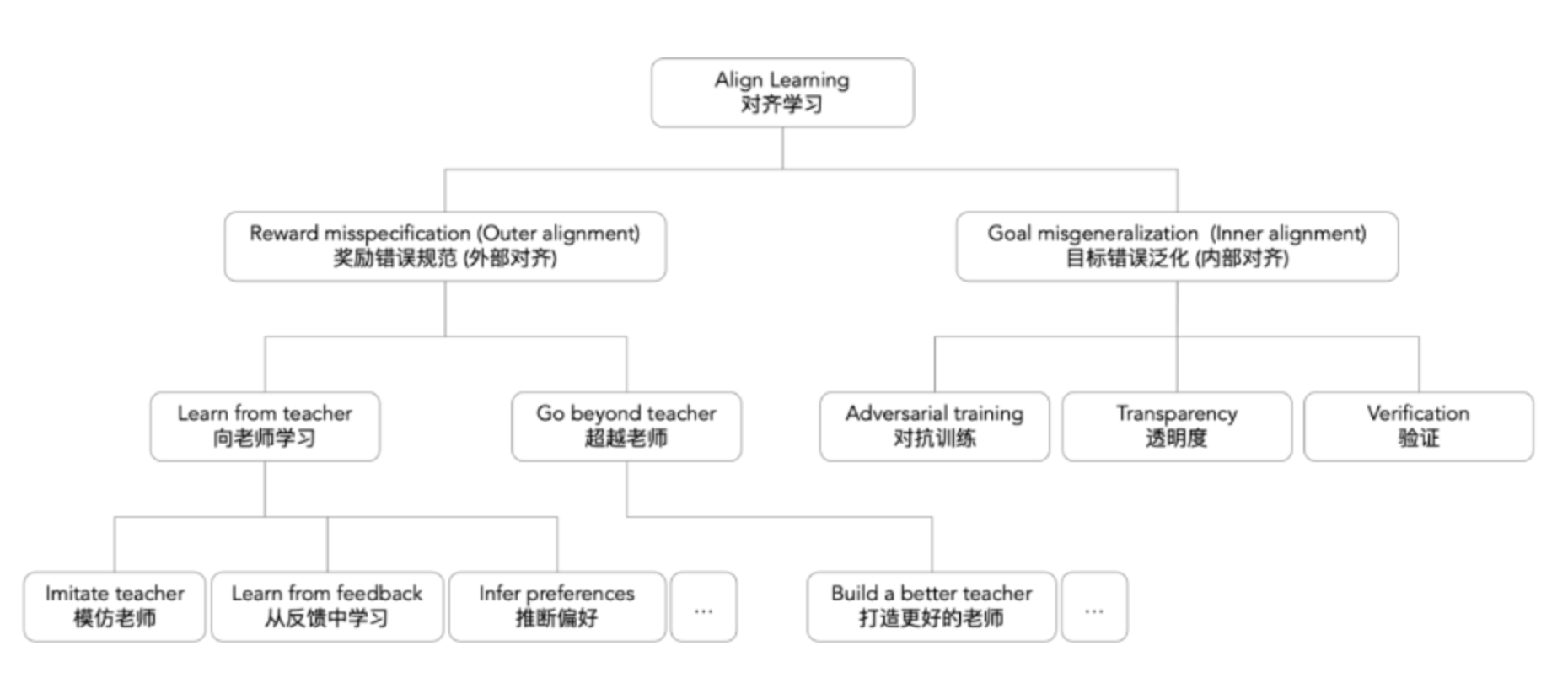

How to deal with it? There is also a lot of discussion about scalable oversight, about different directions such as least-to-most prompting, AI safety via debate, separating different missions of AI safety. Michael [Sellitto] has already proposed imitating the teacher, behavior cloning, the three Hs [helpful, honest, and harmless], and there has been a lot of other discussion here.

关于可扩展监督(scalable oversight),也有很多关于不同方向的讨论,区分出了关于AI安全的许多不同的任务,比如模仿老师、行为克隆、HHH以及其他很多的讨论。

Paul Christiano - AI alignment landscape

Paul Christiano has also talked about this landscape.

我喜欢Paul Christiano对AI对齐问题的分解框架。

What I'm trying to say is that alignment is very important, but if we equate governance with alignment, it might lead to misunderstanding. I think that alignment is an internal solution for the enterprise. I call it the placebo patch for LLM application. Without a patch, the scientist or enterprise will not be at ease. A lot of researchers have talked about this, that it is an impossible mission, a process, not a result. So, my question is, how to understand LLM governance deeply?

我想说的是,对齐非常重要,但是如果我们将治理等价于对齐,就可能带来很多误解。我认为对齐是企业的内部解决方案,是一种对大模型的修补。如果没有修补,科学家或企业就无法对大模型感到安心。很多研究人员认为这是一个不可能完成的任务,是一个过程,而不是一个结果。所以我的问题是,如何深入进行大语言模型治理并设定时间表。

I'm trying to separate my presentation into several questions. First, I will give you guys my understanding of this governance picture. Second, I will talk about corporate governance, then national governance, and global governance. Finally, I will discuss consensus governance.

我会把我的演讲分成几个部分:首先是我对这个治理图景的立场,其次是企业治理、国家治理以及全球治理,最后是共识治理。

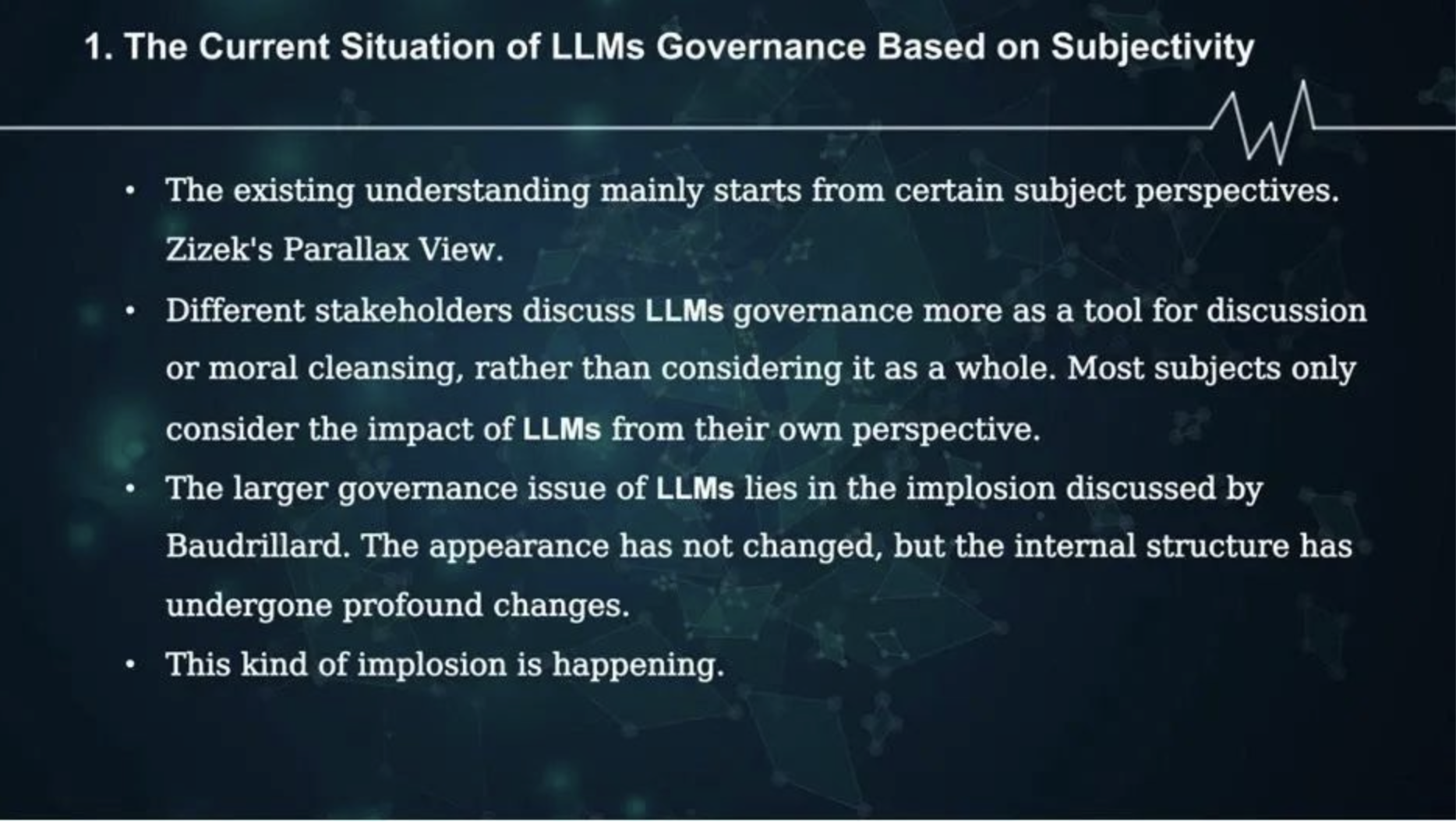

Part 1 The Current Situation of LLMs Governance Based on Subjectivity

Let me first talk about the picture right now. I think the discussion about LLM governance is mostly fragmented. Different stakeholders discuss in different directions and with different interests. But the larger governance issue is that I think LLMs are an implosion, not an explosion. We compare the effect of language models to a nuclear explosion, but I think the effect of LLMs is more like an implosion as discussed by Baudrillard. You don't fear it because there are no changes in appearance, but it has already changed things fundamentally.

Part.1 基于主体性的大语言模型治理现状

首先我认为目前关于这种治理的讨论都在不同的方向、不同的利益中进行,是一场不同利益相关方之间的碎片化的讨论。但更大的治理问题是,用我的说法,我认为大模型是一种“内爆”(implosion)而不是我们一般所说的“爆炸”(explosion)。我们常把大模型的出现比作核爆炸,但实际上两者有根本性的不同。

Part 2 Corporate Governance: Power Struggle and Governance Structure

I see this power struggle as a part of corporate governance. The first one is internal governance. We already see in enterprises this bigger power struggle in OpenAI. I think that is a conflict between the non-profit and for-profit structures. I think Ilya [Sutskever] is resisting as a non-profit representative. But, it has returned to the rule of effective accelerationism. I think you also can feel the penetration of capital into the non-profit structure.

Part.2 企业治理:权力斗争与治理结构

因此,我们已经看到了企业治理。我们看到了这种权力斗争,就像它是治理的一部分。首先是内部治理。我们已经在OpenAI看到了一场权力斗争,我认为这是非营利和营利之间的冲突。最终,我认为Ilya是非营利路线的一种抵抗,但OpenAI已回归到有效加速的规则。在这方面,我认为你们也可以感受到资本渗透到非营利架构中。

A big thing that we have already talked about is Q* [Q-star]. What is Q*? There is a lot of discussion of “Tree of Thoughts,” or “process supervision,” or maybe synthetic data, such as Orca, which is closely tied to Microsoft. We're not sure, but I think that might be a big thing for us to see the future of AGI.

There is also corporate competition as inter-corporate governance. So, as we talk about open-source or closed-source approaches, I think the advantage of open source is democratization and equalization of power. It gives everyone an opportunity to participate. It’s a weapon of the weak. The disadvantage is the security issues, and there's a dual contradiction between insufficient competition and disorderly competition. So it's very hard to define.

我们已经讨论过的一个重要事项是Q*(Q-星)。Q*是什么?围绕“思想树”、“过程监督”或可能是合成数据——比如与微软紧密相关的Orca,有很多讨论。我们不能确定,但我认为那可能是我们会看到的一个重大问题。未来的AGI,也存在企业之间的治理竞争。因此,当我们讨论是否开源时,我认为开源的优势是权力的民主化和平等化。开源使每个人都有机会接触AI,这是一种弱者的武器,然而开源也会带来安全问题。因此,在处理安全问题时,在不充分竞争和无序竞争之间的存在双重矛盾。

But I think for corporate governance, we should have measures before deployment and after deployment. Before deployment, there are several discussions about gradual scaling, staged deployment, and Chief Risk Officer, internal auditing, red-teaming, etc. For after deployment, I think there must be some risk management, reporting safety incidents, and no unsafe open sourcing.

So I think there must be three elements in corporate governance:

1. Introduce big model governance into corporate governance architecture. This should be a substantive architecture rather than a formal architecture.

2. Ensure that a corresponding proportion of computing power and talent resources are used in governance.

3. Develop corresponding best practices as Brian’s team [Concordia AI] has done, and ensure governance rules by mutually reinforcing workflows through open industry associations or enterprise alliances.

但我认为,对于企业治理来说,我们应该在部署前和部署后都采取一些举措。在部署前,有关于逐级扩展、分阶段部署、首席风险官、内部审计、红队测试等的讨论。在部署后,我认为必须要有一些风险管理、安全事件报告和不进行不安全的开源。

我认为公司治理一定要有这样三个要素:

一是把大模型治理引入到公司治理的架构当中,需要一个实质性的架构,而不是形式性的架构;

二是确保有相应比例的计算能力和人才用在治理上;

三是制定相应的最佳实践并确保治理规则。

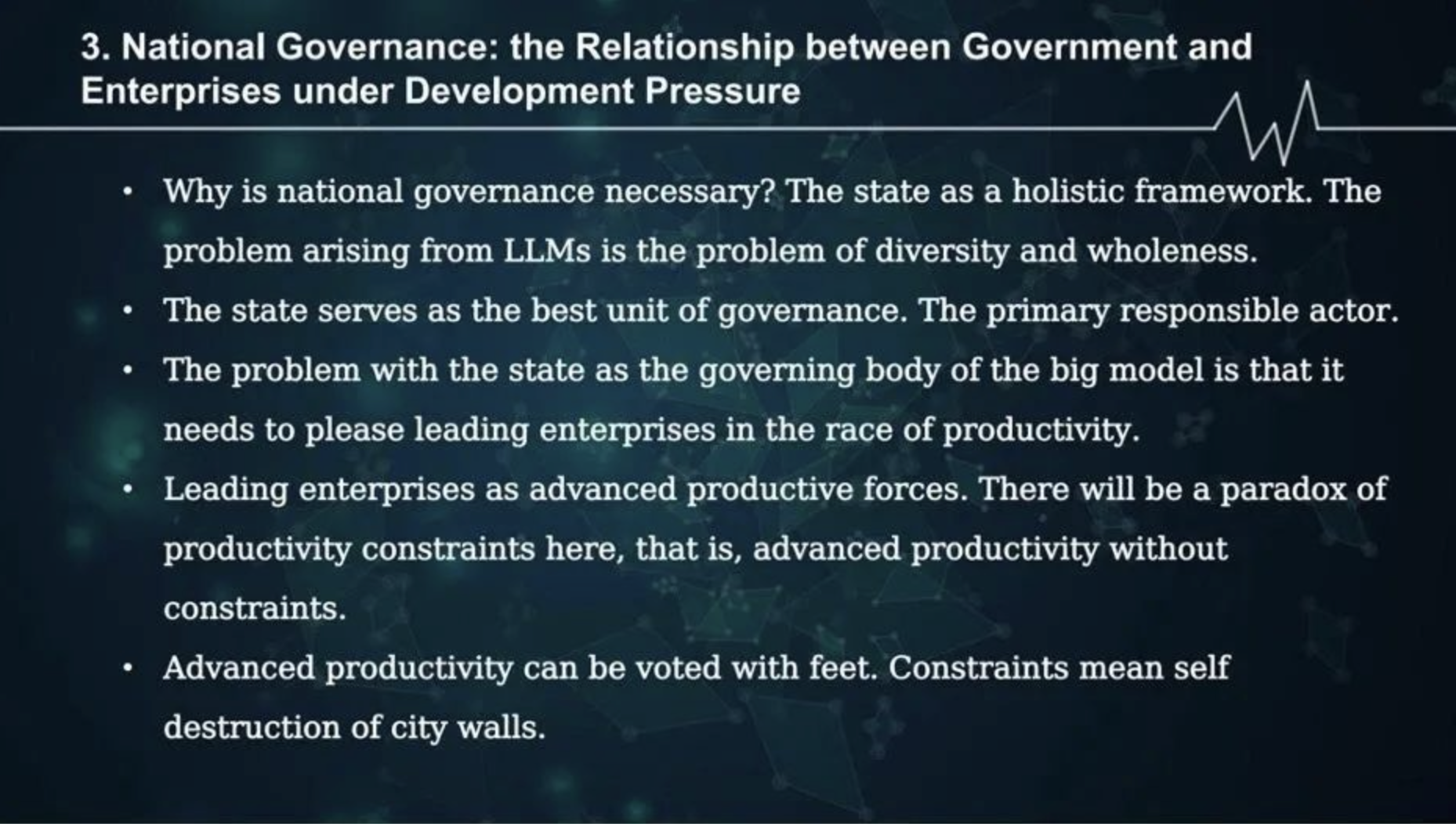

Part 3 National Governance: the Relationship between Government and Enterprises under Development Pressure

The third topic is why national governance is very important. I think the nation or state is the best unit of governance and the primary responsible actor. But the problem with the state is that if you want to win the race of productivity, you have to follow the guidelines of enterprises.

Part.3 国家治理:发展压力下的政企关系

接下来我想强调的是,国家治理也是非常重要的。因为国家是最好的治理单位,是负主要责任的行动者。但国家治理的问题在于,如果你想在生产力竞赛中获胜,你就必须遵循那些企业的规则。

So, there is an asymmetric government-enterprise relationship. So, we talk a lot about regulation, but in practice, regulations are always suspended. But I think that maybe in the earliest stage of AI governance, with social conflicts continuing to escalate, maybe national power should face some adjustment.

可见政府企业关系是不对称的。因此,我们经常谈论国家监管,但在实践中,法规总是被搁置。但我认为在AI治理的早期阶段,社会冲突持续升级,国家权力可能需要做出一些调整。

The greater difficulty in national governance lies in its own insufficient capacity and relatively slow response to changes. Therefore, I believe that national governance should also include other players, such as scientists, social scientists, and active citizens.

国家治理的更大困难在于自身能力不足、应对变化相对缓慢。所以我认为,国家治理应该强调至少有关国家的几个方面。我认为还应该纳入其他参与者,例如科学界和积极公民。

Furthermore, I believe that more corresponding government positions should be added for the governance of LLMs, and comprehensive third-party audits of models should be formed. I think that audits are very important, as is tracking the weights of LLMs, at least for the huge models, and reporting their computing power.

并且,我认为应该为大模型治理增加更多的相应体制,并进行全面的第三方模型审查。我认为审查是非常重要的,并且审查也要关注大语言模型的算力。

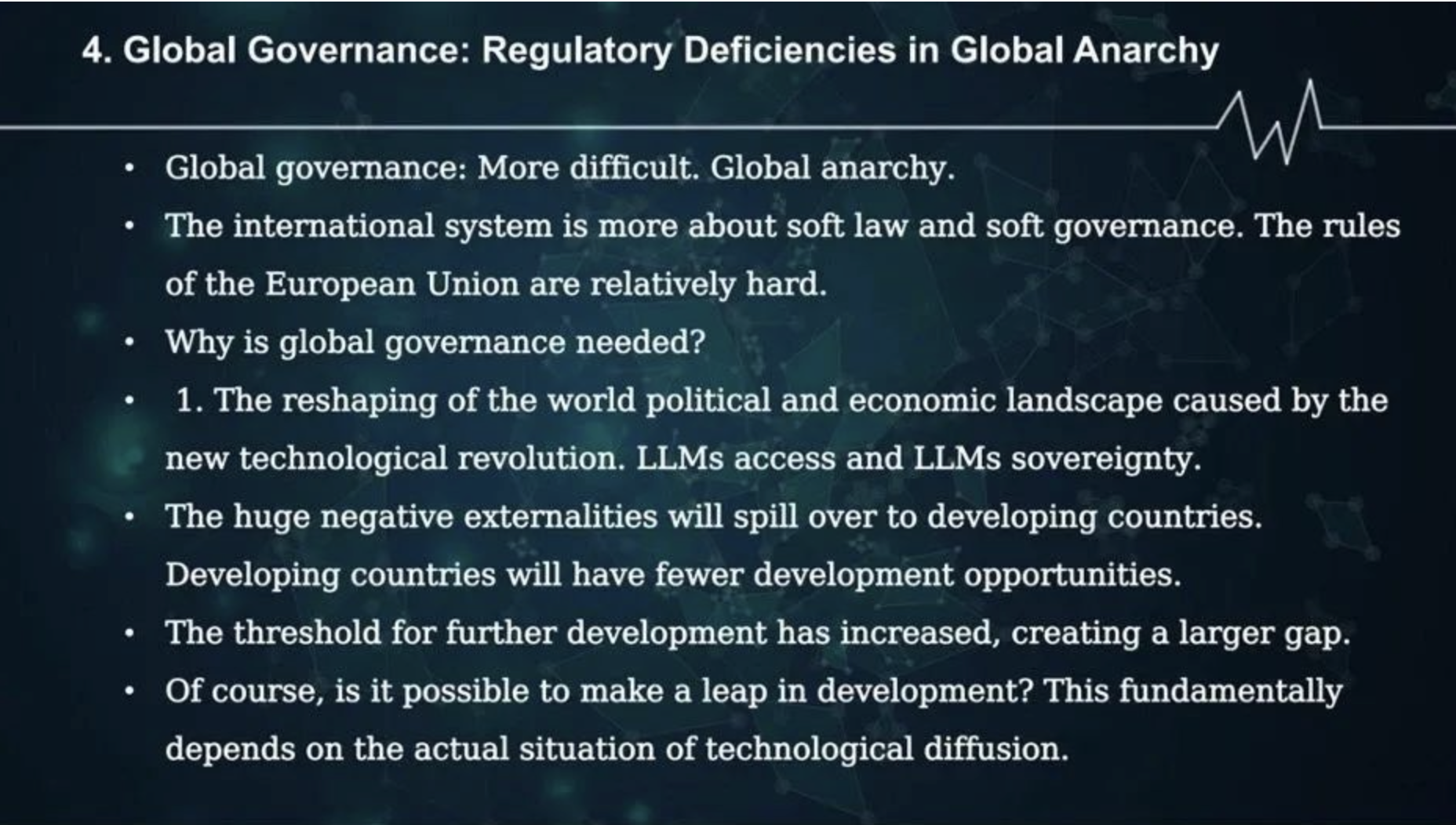

Part 4 Global Governance: Regulatory Deficiencies in Global Anarchy

About global governance, I think there has been a lot of talking about it. I think it is very important, especially for small states. I propose LLMs access and LLMs sovereignty. That's the reason why middle powers have to build their own LLMs. But, there is also a gap for developing countries.

Part.4 全球治理:全球无政府状态下的监管缺陷

关于全球治理,我认为有很多讨论,特别是对于小国家来说,非常重要。我提出了大模型准入和大模型主权的概念,这也是中小国家有必要建立自己的大模型的原因,但这对发展中国家来说也存在一道需要跨越的鸿沟。

And finally, I think international consensus is very crucial. We need to give a clear definition of AGI. I believe that right now, Ilya Sutskever already had this "Oppenheimer moment," and perhaps in the next few years, we may witness the "Hiroshima moment," seeing the real power of AGI. So, I think there are two key questions. First, should we use existing mechanisms or create new ones? Second, which approach should we take? Today, we have discussed the IAEA approach or IPCC approach, as well as bilateral or multilateral mechanisms.

最后,我认为国际共识也是非常重要的,我们需要对AGI给出一个明确的定义。因此,我认为现在我们已经到了“奥本海默时刻”,也许在接下来的几年里,我们还要迎来“广岛时刻”,看到AGI的真正威力。因此,我认为有两个关键问题:我们应当利用现有机制还是创建新机制?我们应当采取哪条路径?今天我们已经讨论了IAEA路径或IPCC路径,以及双边或多边机制。

As I already mentioned, I am very honored to have participated in the article “Managing AI Risks in an Era of Rapid Progress.”

对于《人工智能飞速进步时代的风险管理》(Managing AI Risks in an Era of Rapid Progress)这篇文章,我非常荣幸能参与其中。

Part 5 How to Form a Consensus Governance Architecture for LLMs?

And finally, why is consensus governance crucial? Right now, we are fragmented because we are using a subjective perspective, with individual perspectives and individual interests. Instead, we need a kind of intersubjective perspective. So this is where consensus governance is really from.

Part.5 共识治理:如何构建大模型共识治理架构?

最后,我认为共识治理至关重要。现在我们采用的还是主体性的视角,我们在出于个人利益、进行个人的实践。因此,我们需要一种主体间的视角,这就是共识治理。我认为对齐的真正目标应该是人类的对齐。

We talk about alignment. I think the true goal of alignment should be human alignment. We ask machines to align with human values. The problem is that I think the OpenAI team are not aligned. So, the internal order of human society is too far from being aligned.

共识治理需要人类社会的对齐,也需要机器与人类价值观保持一致。问题是,我认为OpenAI团队并没有做到这一点。因此,人类社会离对齐还很遥远。

So why do we need machine alignment? There has been recent movement on the RLAIF method, which seems like an option for us, but actually, I'm a little worried about this, since we may delegate too much to the machine.

那么,我们为什么需要机器对齐呢?这是最近关于RLAIF的动态。这是我们的一个选择,但我们似乎把太多的权力下放给了机器,实际上我有点担心。

I think there are two types of alignment, from machine to human alignment and also from human to machine alignment. I don't have enough time to discuss the concept of human negative alignment tax. In the end, I think we should go back to human subjectivity.

所以,我认为有两种对齐方式,一种是机器向人的对齐,一种是人向机器的对齐。我没有足够的时间来谈这个人类的负对齐税这个概念。最终,我认为我们应该回到人类的主体性上来。

Translator’s Notes

1. The original Chinese text says “three points,” but Professor Gao articulated four points in his article.